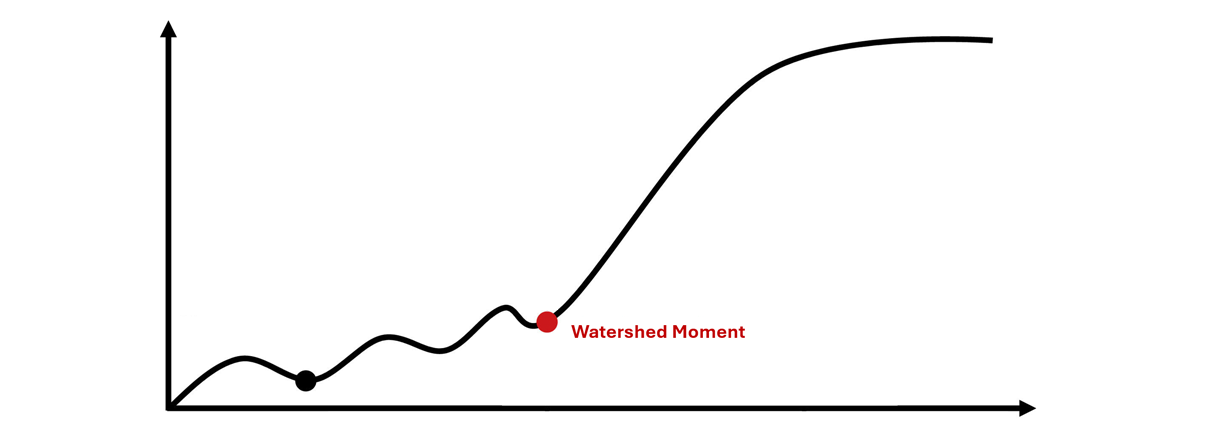

A Watershed Moment for Owning IP Moats in the AI Stack

On January 27, 2025, a relatively unknown Chinese startup called DeepSeek sent shockwaves through US markets. Nvidia’s market capitalization dropped by more than half a trillion dollars, the largest single-day loss in market value for any public company in history.

DeepSeek, a Chinese artificial intelligence company developing open-source large language models (LLMs), demonstrated that it could build an LLM comparable to those of US tech giants, reportedly at a fraction of the cost and using less powerful GPUs because of US export restrictions on advanced chips to China.[1]

The key question: Did investors in Nvidia, Broadcom, Microsoft, Constellation Energy, and others have legitimate reasons for concern?

To answer this, we need to consider two crucial aspects of DeepSeek’s announcement. First, the company published a comprehensive whitepaper detailing its innovations in model training, which enabled more efficient and affordable LLM development. Second, it released its code as open source under permissive license terms.[2] Together, these choices mean DeepSeek’s innovations may have few protective moats.

Companies like Meta could benefit from this development. Meta has already assigned four research teams to analyze DeepSeek’s innovations and apply the findings to its LLaMA models. Because both DeepSeek and LLaMA are open source, Meta may freely incorporate these innovations into LLaMA while training on more powerful GPUs that US export restrictions keep out of China, e.g., Nvidia H100s.

Previously, investors assumed that the substantial capital required for model training, often tens of millions of dollars, created a natural economic barrier to entry. DeepSeek’s breakthrough, however, demonstrates that human engineering and ingenuity remain a powerful force in AI development. If capital is no longer the primary barrier to entry in the LLM market, incumbents (e.g., OpenAI) may lack inherent moats protecting their market position, hence the market’s dramatic reaction.

But why would making LLM training more efficient threaten US tech companies in AI, especially when DeepSeek published its code as open source?

Initially, investors feared that more efficient LLM training would reduce demand for chips and other computing resources. History, however, suggests a different outcome, known as Jevons Paradox. Named after William Stanley Jevons, who described it in 1865 in The Coal Question, the paradox describes how, when a technology becomes more efficient and affordable, adoption often grows enough that overall resource consumption rises rather than falls.[3]

Just as more efficient steam engines ultimately increased coal demand through widespread adoption, DeepSeek’s innovations could make LLMs more accessible, spurring AI applications in sectors such as law, medicine, and marketing, as well as AI agents and many other uses. This broader adoption would likely increase demand for training, inference, and computing resources, including the most powerful GPUs.[4]
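To make the Jevons mechanism concrete, here is a toy model, a minimal sketch under our own assumptions rather than a forecast: demand for LLM “tasks” follows a constant-elasticity curve, and the numbers (a baseline of 100 tasks, resource measured in GPU-hours) are hypothetical. When efficiency doubles, each task costs half as much. Whether total GPU-hours rise depends on whether demand is elastic enough, meaning elasticity above 1.

```python
# Toy Jevons-paradox model. Illustrative assumption: constant-elasticity demand.
# "Tasks" stand in for LLM queries; the resource stands in for GPU-hours.

def resource_use(efficiency: float, elasticity: float,
                 base_demand: float = 100.0, resource_price: float = 1.0) -> float:
    """Return total resource consumed when one unit of resource performs
    `efficiency` tasks and task demand follows a constant-elasticity curve."""
    cost_per_task = resource_price / efficiency            # efficiency gains make each task cheaper
    tasks = base_demand * cost_per_task ** (-elasticity)   # cheaper tasks -> more tasks demanded
    return tasks / efficiency                              # resource needed to serve that demand


for elasticity in (0.5, 1.0, 1.5):
    before = resource_use(efficiency=1.0, elasticity=elasticity)
    after = resource_use(efficiency=2.0, elasticity=elasticity)  # efficiency doubles
    print(f"elasticity={elasticity}: {before:.1f} -> {after:.1f} GPU-hours")
# elasticity=0.5: 100.0 -> 70.7 GPU-hours
# elasticity=1.0: 100.0 -> 100.0 GPU-hours
# elasticity=1.5: 100.0 -> 141.4 GPU-hours
```

Under these assumed numbers, doubling efficiency cuts total GPU-hours only when demand is inelastic. When demand is elastic, total use rises, which is the Jevons outcome investors initially overlooked.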

Key Lessons

When DeepSeek published its innovations as open-source code, it sacrificed any proprietary advantages it may have had in the code and its model-training efficiencies. Its technology became available to study, use, and improve upon, including by US companies with access to the most advanced hardware.

Had DeepSeek kept its innovations proprietary and, for example, obtained patents, it might have secured valuable blocking rights against competitors. The recent losses in market value may offer a rough approximation of part of such rights’ potential value. This market reaction highlights the importance of keeping key technology proprietary and protecting it with intellectual property through patents, trade secrets, copyrights, and other means.

AI startups aiming to capitalize on their innovations should consider maintaining proprietary control by:

- Carefully evaluating whether to include their code in open-source releases

- Protecting key innovations with robust IP rights

- Focusing on products that provide demonstrable performance advantages, especially at key bottlenecks

- Building strong IP moats around their technology

We believe human innovations will continue to rapidly evolve the entire AI stack, creating opportunities for smart AI startups to capture substantial market value through proper IP protection. Our firm advises and invests in these innovative AI startups, helping them secure IP moats for the most valuable portions of the AI stack—particularly protecting innovations that address key bottlenecks with blocking IP rights.

The innovations at the model layer, as demonstrated by DeepSeek, represent just the beginning of the innovation cycle.[5] At GIQ, we anticipate many more breakthrough innovations from AI startups that will transform the AI marketplace at every level of the AI stack.

[1] Both contentions are being challenged. DeepSeek reportedly used a distillation process that lowered the cost of model development, a practice that model developers such as OpenAI and Meta prohibit. With distillation, existing AI models (e.g., OpenAI’s o1 and LLaMA) are repeatedly queried, and their answers are used to train new models. This shortcut yields models that roughly approximate state-of-the-art models at a fraction of the cost to produce. Additionally, DeepSeek may have used H100s (in violation of export restrictions) and/or many thousands of Hopper-generation chips to train its models, which would have added considerable infrastructure costs to model training.

[2] The code repository is licensed under the MIT License. Use of the DeepSeek-V3 Base/Chat models is subject to the Model License. The DeepSeek-V3 series (including Base and Chat) supports commercial use.

[3] https://generativeiq.com/what-do-steam-engines-and-ai-chips-have-in-common-jevons-paradox/. We wrote about this economic principle in a blog post dated June 25, 2024, entitled “What Do Steam Engines and AI Chips Have in Common: Jevons Paradox.”

[4] While this counterintuitive economic phenomenon is often rejected by markets, there are countless examples of technological innovation spurring new market revolutions: cloud services led to the SaaS revolution, iPhone adoption to the mobile computing revolution, and microprocessors to the personal computing revolution.

[5] https://generativeiq.com/ai-innovations-will-lead-to-a-tsunami-of-ip-rights/. We wrote about this innovation cycle beginning at the model layer a year ago, predicting a wave of model-layer innovations that would kick off the “innovation stage.” See our blog post entitled “AI Innovations Will Lead to a Tsunami of IP Rights,” January 19, 2024.