The Future of AI Compute Depends on Light

The physical limits of traditional silicon are driving the need for new technologies and architectures for AI computing. In this blog, we discuss how optical (light-based) computing may offer near-term solutions to these limitations, and how these innovations are creating new opportunities for blocking IP rights.

Background

The World Economic Forum estimates that the energy required for AI compute will increase 50% each year through 2030 [1]. Hyperscalers are looking for ways to produce more energy to feed this demand, including nuclear fission and fusion energy production. Exponentially growing use cases for AI and the push toward artificial general intelligence (AGI) are forcing the industry to urgently look for lower-energy, higher-performance solutions.

Current headlines, reports, and articles consistently identify two limitations that today's solutions face in meeting the growing demand for compute:

- Unsustainable growth in the energy infrastructure required for AI could limit progress, and

- AI chip processing performance is not keeping pace with the ever-growing demand for compute.[2][3][4]

According to the Jevons Paradox, as the cost of compute falls, demand for AI compute rises, driven by the ever-growing number of use cases that AI applications enable [5]. Demand for AI compute is growing at least 11-fold each year, while GPU performance is improving only about 1.3-fold each year [6][7]. Data center demand is outstripping both the supply of high-performance GPUs and power grid capacity. Even so, data center spending is projected to reach $1 trillion per year by 2028 [8]. New chip architectures are needed to meet this demand, improve performance, and reduce power consumption, sustaining the Jevons Paradox flywheel effect [9].
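To make the size of this gap concrete, here is a small back-of-the-envelope sketch in Python. It simply compounds the two annual growth rates cited above (roughly 11x per year for AI compute demand and 1.3x per year for GPU performance) and reports how far demand outruns per-chip performance gains after a given number of years. The function and constant names are our own illustration, and the sketch assumes both rates stay constant, which is a simplifying assumption rather than a forecast.

```python
# Back-of-the-envelope sketch: how quickly does AI compute demand outrun
# GPU performance if both grow at constant annual rates?
# Assumption (illustrative only): the ~11x demand and ~1.3x GPU performance
# annual factors cited in the text stay constant; real-world rates will vary.

DEMAND_GROWTH_PER_YEAR = 11.0  # AI compute demand multiplier per year [6]
GPU_GROWTH_PER_YEAR = 1.3      # GPU performance multiplier per year [7]


def compounded(factor_per_year: float, years: int) -> float:
    """Total growth multiplier after compounding an annual factor."""
    return factor_per_year ** years


def demand_to_performance_gap(years: int,
                              demand_factor: float = DEMAND_GROWTH_PER_YEAR,
                              gpu_factor: float = GPU_GROWTH_PER_YEAR) -> float:
    """Ratio of demand growth to per-GPU performance growth after `years`
    years, measured from a common starting point. This is the shortfall
    that must be covered by more chips, more power, or new architectures."""
    return compounded(demand_factor, years) / compounded(gpu_factor, years)


if __name__ == "__main__":
    for years in range(1, 6):
        print(f"after {years} year(s): "
              f"demand x{compounded(DEMAND_GROWTH_PER_YEAR, years):,.0f}, "
              f"GPU performance x{compounded(GPU_GROWTH_PER_YEAR, years):.2f}, "
              f"gap x{demand_to_performance_gap(years):,.0f}")
```

Under these assumptions the gap is already about 8.5x after a single year (11 / 1.3) and compounds from there, which illustrates why simply adding more silicon GPUs is unlikely to keep pace on its own.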

Limitations of the Silicon GPU

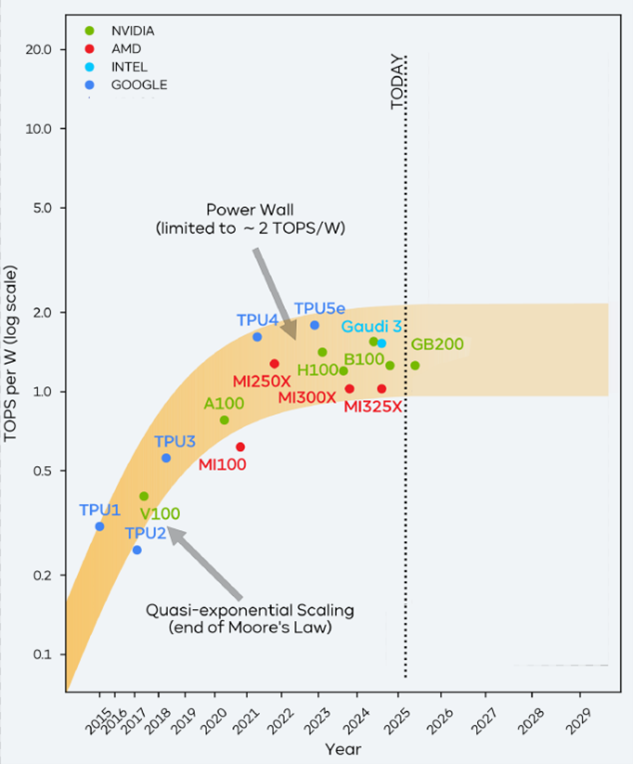

Current silicon GPU designs are approaching the limits of their performance gains. Moore's Law describes the trend in which the number of transistors on a chip roughly doubles every two years, historically bringing comparable gains in performance. However, these gains are becoming unsustainable as transistor and interconnect dimensions approach their physical limits. Rising thermal costs (liquid cooling, power, and more) and the speed limits of moving electrons through ever-smaller geometries across the chip and system further restrict their growth potential.

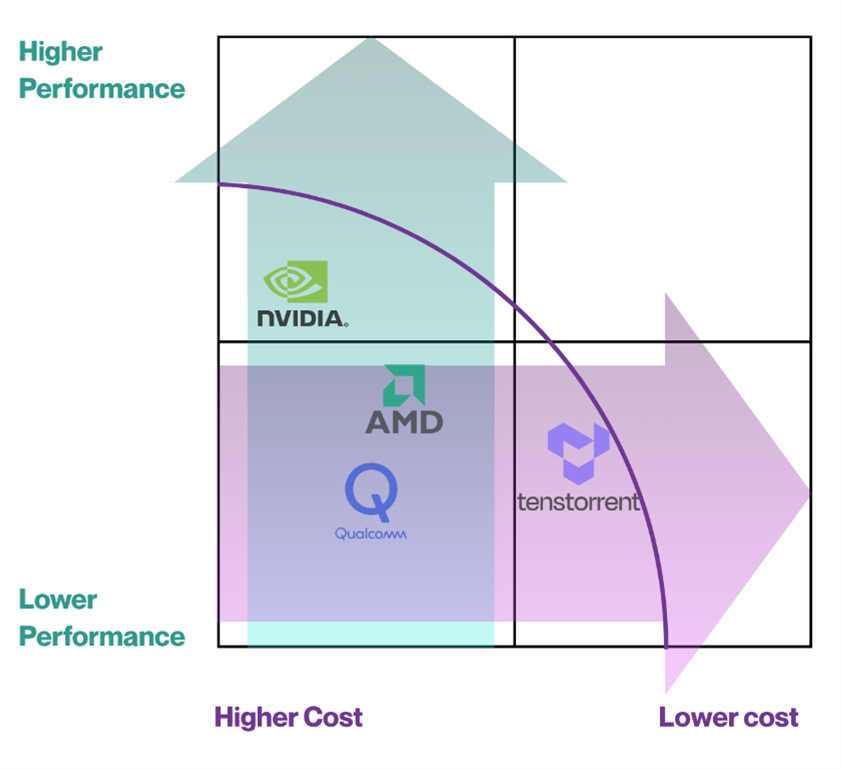

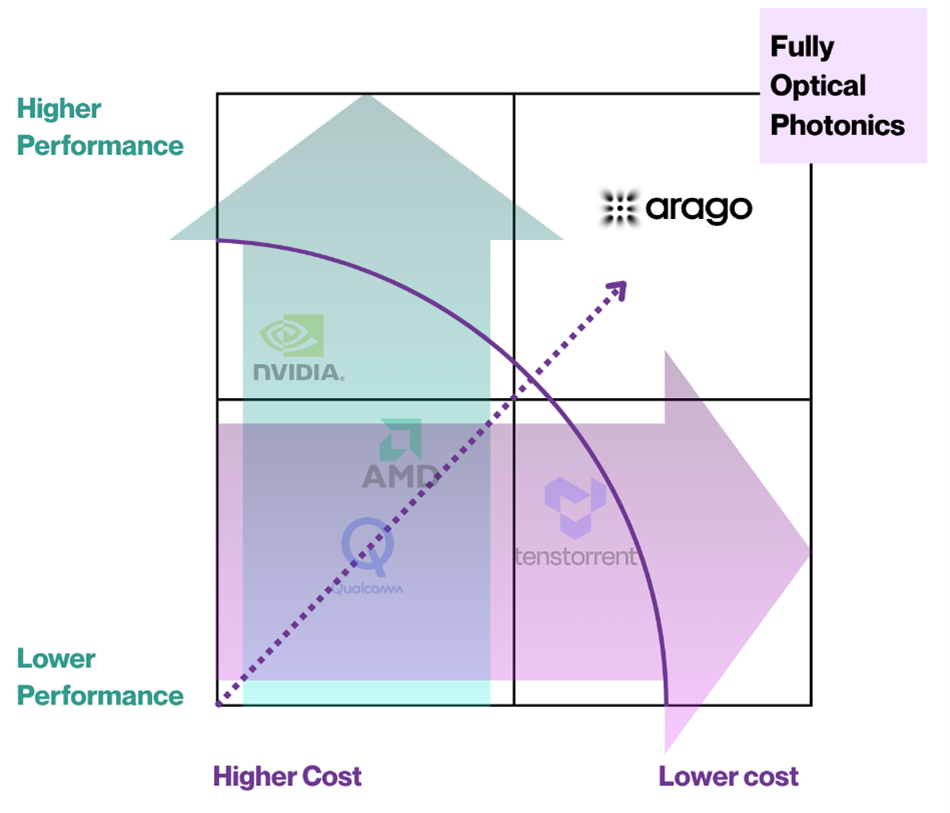

Figure 1

Figure 1 plots example GPU chip suppliers by the performance and cost of their chips (including both energy costs and purchase price). For example, Nvidia sits in the upper left quadrant: its processors deliver high performance but are expensive to buy, cool, and run. The purple curve marks the performance and cost boundary of these silicon-based GPUs. Meeting the ever-growing demand for processors in the AI marketplace will require new processors with higher performance at lower cost (the upper right quadrant).

Figure 2

Figure 2 shows how the performance of silicon-based chips has flattened over time, which explains the purple curve bounding performance and cost improvements in Figure 1.

Next Generation Processors Enabled by Optical Computing

To address these limitations, several companies are developing photonics-based designs. To reduce interconnect power and improve communication efficiency between chips, Lightmatter [www.Lightmatter.com] introduced photonic interconnects that use light, rather than electrons, to carry data. To reduce power and improve efficiency for computation on the chip itself, Arago [www.Arago.inc] introduced a photonic processor that uses light, rather than electrons, to perform computation. This approach sidesteps the challenges of shrinking transistor geometries and improves overall thermal performance, especially for AI workloads.

Figure 3

Light enables parallelism and scalability without the expensive thermal costs associated with today's silicon-based processors. This approach has been shown to enable higher-performance, lower-cost processors that fall into the upper right quadrant of Figure 3. The more components that are implemented optically, the higher the performance and the lower the cost, approaching the top right corner of the graph.

The Photonic Computing Innovation Cycle Is Driving Blocking IP Rights

Breaking through the performance/cost boundary for processors is one of the most significant challenges in AI computing. The industry will continue to spend billions of dollars on processor innovation to meet ever-growing demand. Some of these innovations (e.g., photonic optical computing and quantum computing) are likely to enable fundamental technological breakthroughs that become the building blocks of future AI processing. These innovations will also likely create opportunities to obtain blocking IP rights. GIQ will follow these developments closely and provide further insights as they emerge.

By Bob Steinberg, Fred Steinberg, and Calki Garcia

Edited by Sophie Steinberg and Jacob Levine

Bob Steinberg is the Founder of GenerativeIQ® LLC, a venture fund that provides capital to early-stage, IP-rich AI start-ups. For over 30 years, he has protected and litigated IP rights, helped technology companies and entrepreneurs navigate the IP landscape, and monetized blocking rights.

Fred Steinberg is the senior technology analyst at GenerativeIQ® LLC. As a former Senior Director of Engineering at Intel Corporation, he drove numerous IP and product designs in the areas of 3D, media, and display for the PC and discrete graphics markets, including the Microsoft Surface Pro and Intel Core products.

Calki Garcia is a design strategist and research intern at GenerativeIQ® LLC, contributing research and insight on emerging AI technologies, market trends, and startup ecosystems. He contributed to this piece as part of GIQ’s ongoing analysis of the AI investment landscape.

[3] https://www.weforum.org/stories/2024/07/generative-ai-energy-emissions/

[4] https://www.weforum.org/stories/2025/07/ai-infrastructure-sustainable-future-energy/

[5] https://generativeiq.com/what-do-steam-engines-and-ai-chips-have-in-common-jevons-paradox/

[6] https://openai.com/index/ai-and-compute

[7] https://epoch.ai/blog/trends-in-gpu-price-performance