Nvidia’s $20B Groq Acqui-hire: Why IP Moats Set the Price

In our recent blog post, dated January 26, 2026, we discussed Groq’s announcement that it had entered into a non-exclusive licensing agreement with Nvidia for Groq’s inference technology.[1] Groq also stated that Jonathan Ross (Groq’s Founder and an inventor listed on many of Groq’s patents), Sunny Madra (Groq’s President), and other Groq team members will join Nvidia to “advance and scale the licensed technology,” while Groq continues “independently” under CEO Simon Edwards.[2]

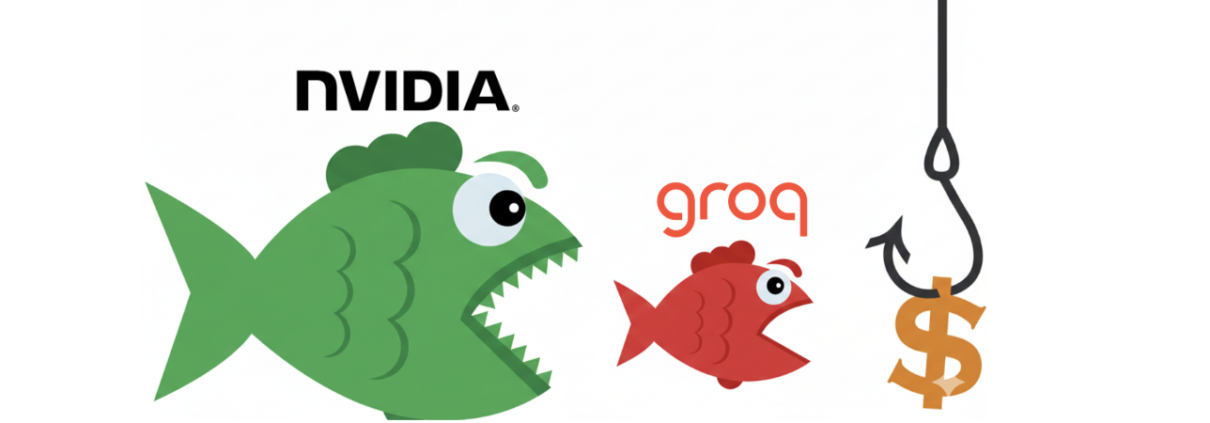

Media coverage framed this as a mega-sized deal but also noted Nvidia’s position that it was not a straightforward purchase of Groq as a company.[3] Online commentary, by contrast, reflects a growing understanding that the deal may be thought of as a functional acquisition in many respects.[4] What many are missing is why Groq was able to command such a high price. The answer is blocking IP rights that are valued highly within an in-demand niche of a growing sector: AI inference. Inference is the process of using already-trained models to make predictions.

While the terms of the IP license can be characterized as “non-exclusive,” pairing the license with the “acqui-hire” of several top executives to oversee Nvidia’s platform integration of Groq’s IP makes the outcome very valuable to Nvidia, because it enables Nvidia to extend its already-dominant GPU platform. Nvidia needed to extend that platform into more efficient, higher-performing inference capabilities, given growing demand for inference and concerns that its GPUs could be outperformed by the competition.[5] Nvidia’s GPU architecture has inherent data-flow bottlenecks that make it less efficient for inference than for model training.

Why Groq’s IP Matters to Nvidia

One of the most useful ways to understand Groq (and the advantage its IP brings to the table) is to stop thinking only about improving chip architectures and start thinking about a full AI-stack approach: adding higher-layer compiler technology that schedules instruction execution more efficiently for inference.[6]

Nvidia already has the dominant GPU for training machine learning models, but the inference market is increasingly seen as fragmented among multiple architectures and competitors. Nvidia’s dominance becomes stronger if it can extend from pure GPU hardware performance to system-level advantages, including in inference.[7] This is reminiscent of Apple’s lock-in strategy across a consumer tech ecosystem,[8] but in AI infrastructure terms. If the best inference technology is “native” inside the Nvidia stack, customer inertia increases, and competitors must beat not only a GPU chip but a platform that also enables efficient inference.

That’s where Groq comes in: with Groq’s IP, Nvidia adds a compiler-layer, software-driven approach to accelerating inference, moving bottlenecks and complexity out of a “hardware-only” approach and into software, including instruction scheduling, explicit data movement, spatial mapping of models onto silicon, and predictable execution, among other techniques.[9]

This matters because inference performance at scale is frequently bottlenecked by realities that general-purpose GPUs don’t solve for. Groq’s value proposition is not measured just in trillions of operations per second (TOPS). It is deterministic, scheduled execution: the system is engineered so that the compiler can “see” and optimize the full computation plan, data movement is explicit and planned, execution is predictable enough to reduce latency variance, and the hardware is used more efficiently because stalls and bottlenecks are removed.[10]
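To make the idea of compiler-scheduled, deterministic execution a little more concrete, here is a minimal Python sketch under simplifying assumptions. It is not Groq’s compiler or chip design: the operation names, cycle counts, and stall model are hypothetical. It contrasts a schedule fixed ahead of time (every operation’s latency is known, so each one is assigned a start cycle before anything runs) with run-time execution in which operations can stall unpredictably.

```python
import random
import statistics
from graphlib import TopologicalSorter

# Hypothetical toy workload: op name -> (latency in cycles, dependencies).
# Names and cycle counts are illustrative assumptions, not Groq data.
OPS = {
    "load_weights": (4, []),
    "load_input": (2, []),
    "matmul": (8, ["load_weights", "load_input"]),
    "bias_add": (1, ["matmul"]),
    "activation": (2, ["bias_add"]),
    "store": (2, ["activation"]),
}


def topo_order(ops):
    """Return op names so that every op appears after its dependencies."""
    graph = {name: deps for name, (_, deps) in ops.items()}
    return list(TopologicalSorter(graph).static_order())


def compile_static_schedule(ops):
    """Assign each op a fixed start cycle before execution (ASAP scheduling).

    Because every latency is known in advance, the whole timeline is
    decided at "compile time".
    """
    start = {}
    for name in topo_order(ops):
        _, deps = ops[name]
        start[name] = max((start[d] + ops[d][0] for d in deps), default=0)
    return start


def run_static(ops, schedule):
    """Total cycles when every op runs exactly in its precomputed slot."""
    return max(schedule[name] + ops[name][0] for name in ops)


def run_dynamic(ops, rng, stall_prob=0.3, stall_cycles=5):
    """Total cycles when ops can stall unpredictably at run time.

    Assumed stall model: each op independently waits an extra
    `stall_cycles` with probability `stall_prob`, standing in for
    events such as cache misses or memory contention.
    """
    finish = {}
    for name in topo_order(ops):
        latency, deps = ops[name]
        ready = max((finish[d] for d in deps), default=0)
        stall = stall_cycles if rng.random() < stall_prob else 0
        finish[name] = ready + latency + stall
    return max(finish.values())


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is reproducible
    schedule = compile_static_schedule(OPS)
    static_runs = [run_static(OPS, schedule) for _ in range(1000)]
    dynamic_runs = [run_dynamic(OPS, rng) for _ in range(1000)]
    print("static : mean", statistics.mean(static_runs),
          "stdev", statistics.pstdev(static_runs))
    print("dynamic: mean", statistics.mean(dynamic_runs),
          "stdev", statistics.pstdev(dynamic_runs))
```

In this toy model the statically scheduled run finishes in the same number of cycles every time, so its latency variance is zero, while the dynamic runs can only match that figure when no stalls occur and otherwise take longer. That gap is, in simplified form, the “predictable execution” advantage described above.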

That said, one need not master the intricacies of the technology to grasp the key takeaway here: “system advantages” are real, and they can be protected by IP moats. Especially in a fast-moving, cash-saturated AI industry, time is of the essence, and licensing or acquiring key IP rights is the best way for frontier companies to stay competitive. Therefore, AI startups that own those rights put themselves and their investors on pace for super-unicorn results.

Conclusion

The functional outcome of the Nvidia-Groq deal is clear: Nvidia secured the right to use Groq’s inference technology, and it also brought in the engineers best positioned to operationalize that technology and integrate it tightly inside Nvidia’s existing stack. At the same time, the $20B price tag signals that, even without a traditional acquisition, Groq’s IP and know-how were valuable enough to command an acquisition-level price.

Although Groq was not seeking a sale, its strong IP forced Nvidia to license the technology, giving Groq significant leverage in the deal. This dynamic reinforces the core thesis of our prior posts. When a startup owns IP moats around a strategically important technical wedge (here, inference acceleration through instruction scheduling, memory and data-movement efficiencies, and system-level determinism), an incumbent cannot simply “hire around” the innovation or reimplement it. The incumbent must negotiate for access to blocking IP rights, which become a primary driver of exit economics.

For founders and investors in AI frontier companies, the takeaway is pragmatic: strong IP moats don’t just protect innovation, they set the price.

By Bob Steinberg, Jacob Levine

Bob Steinberg is the Founder of Generative IQ® LLC, a venture fund that provides capital to early-stage, IP-rich AI start-ups. He has been protecting and litigating IP rights, working with technology entities and entrepreneurs navigating the IP landscape, and monetizing blocking rights for over 30 years.

Jacob Levine is a J.D. Candidate 2027 at Harvard Law School where he serves as a Project Director for the Harvard Law Entrepreneurship Project. He graduated from American University in 2022 in the Global Scholars Program with dual degrees in Computer Science and International Studies. Jacob enjoys playing basketball and jazz music, woodworking, weight training, and reading. He loves to go on backpacking excursions with family and friends and attend concerts.

[1] https://generativeiq.com/nvidias-20b-groq-deal-how-ip-moats-commanded-the-highest-acqui-hire-price-ever/

[2] https://groq.com/newsroom/groq-and-nvidia-enter-non-exclusive-inference-technology-licensing-agreement-to-accelerate-ai-inference-at-global-scale.

[3] See, e.g., https://www.cnbc.com/2025/12/24/nvidia-buying-ai-chip-startup-groq-for-about-20-billion-biggest-deal.html.

[4] See, e.g., https://intuitionlabs.ai/articles/nvidia-groq-ai-inference-deal; https://www.eetimes.com/what-is-groq-nvidia-deal-really-about/.

[5] https://finance.yahoo.com/news/microsoft-releases-powerful-ai-chip-201716391.html?guccounter=1

[6] https://finance.yahoo.com/news/nvidia-groq-deal-meet-other-181038844.html.

[7] https://venturebeat.com/infrastructure/inference-is-splitting-in-two-nvidias-usd20b-groq-bet-explains-its-next-act.

[8] https://www.forbes.com/sites/jump/2012/11/12/take-a-lesson-from-apple-a-strategy-to-keep-customers-in-your-ecosystem/.

[9] https://console.groq.com/docs/tool-use/built-in-tools/visit-website; https://www.fierce-network.com/cloud/heres-why-nvidia-dropping-20b-groqs-ai-tech.

[10] https://groq.com/blog/inside-the-lpu-deconstructing-groq-speed.