What do Steam Engines and AI Chips Have in Common: Jevons Paradox

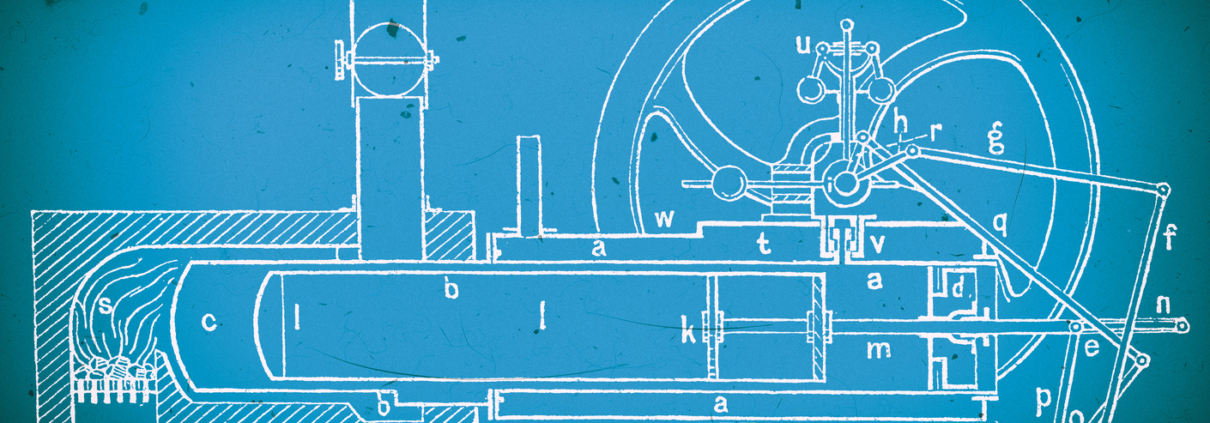

Beginning in the 1760s, innovations such as James Watt’s steam engine redesign greatly improved the efficiency of coal use. Many economists predicted that these improvements would reduce the overall demand for coal and eventually lower its price. One economist, William Stanley Jevons, gained notoriety in 1865 for arguing the opposite: that the demand for coal would increase in the long term.

Jevons was correct. When innovations make the use of a bottleneck resource more efficient, consumption of that resource tends to rise rather than fall, an effect now known as the “Jevons Paradox.” Even though the steam engine’s innovations made coal use more efficient, they did not reduce coal consumption. Instead, they gave coal new applications and thus substantially drove up its consumption, demand, and monetary value. This surge was a catalyst for the Industrial Revolution of the 1800s. Jevons Paradox is applicable to every technological cycle, including that of AI.

In the age of generative AI, the “demand for compute” for training and running AI models and applications could be considered equivalent to the demand for coal in the early days of the 1800s Industrial Revolution. Just like coal in steam engines, the demand for compute is related to the infrastructure that enables generative AI to function. Whereas the steam engine had only one primary input (the availability and efficiency of coal), generative AI has many more, including the availability and efficiency of processors (e.g., GPUs), cloud services and data centers, energy, bandwidth, cooling systems, and many others (AI Infrastructure)¹.

The recent development of large language models (LLMs) by the largest industry players, academia, and the open source community has substantially increased the demand for compute, especially for the machine learning necessary for enabling LLMs². As these LLMs become more available to developers at marginal cost, new applications will emerge that can increase efficiency in virtually every existing industry (from law, to medicine, to computer science, to finance, and so many others) or even perform entirely new tasks, such as being the brain of an autonomous humanoid. This occurs because improved efficiency lowers the relative cost of using a resource (the cost of compute), enabling more use cases for AI applications, which in turn increases the demand for compute. This accelerating “flywheel effect” produces the opportunity for the next Industrial Revolution by improving the efficiency of every component (e.g., GPU) that makes up the AI Infrastructure.
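The mechanism described above can be illustrated with a toy calculation. The sketch below is not drawn from Jevons or from any data on AI workloads. It assumes a simple constant-elasticity demand curve for "useful work" (for example, inferences served), and the names `compute_consumed`, `efficiency`, `elasticity`, `compute_price`, and `scale` are hypothetical, chosen only for illustration.

```python
def compute_consumed(efficiency, elasticity, compute_price=1.0, scale=1.0):
    """Raw compute consumed under an ASSUMED constant-elasticity demand curve.

    efficiency    : useful work delivered per unit of compute (hypothetical units)
    elasticity    : price elasticity of demand for useful work (assumed constant)
    compute_price : price of one unit of raw compute (assumed fixed)
    scale         : arbitrary demand scale factor
    """
    # Better efficiency lowers the cost of each unit of useful work.
    cost_per_unit_work = compute_price / efficiency
    # Assumed demand curve: work demanded = scale * cost^(-elasticity).
    work_demanded = scale * cost_per_unit_work ** (-elasticity)
    # Raw compute needed to deliver that much work.
    return work_demanded / efficiency


if __name__ == "__main__":
    for elasticity in (0.5, 1.0, 1.5):
        before = compute_consumed(efficiency=1.0, elasticity=elasticity)
        after = compute_consumed(efficiency=2.0, elasticity=elasticity)
        print(f"elasticity={elasticity}: compute use changes by a factor of {after / before:.2f}")
```

Under these assumptions, doubling efficiency reduces total compute consumption when the elasticity is below 1, leaves it unchanged at exactly 1, and increases it when the elasticity is above 1. The Jevons Paradox corresponds to the last case: demand for the work that compute enables grows faster than the efficiency gain shrinks the compute needed per unit of work.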

Those who build innovations to the AI Infrastructure that improve AI efficiency may not only create products that continuously accrue demand and value, but also have the opportunity to obtain the most fundamental blocking rights, which will shape the Generative AI Industrial Revolution of the 21st century (as James Watt’s 18th-century steam engine patent did in its time).

For example, one of GenerativeIQ®’s (GIQ®’s) portfolio companies, Arago Inc., invented a new photonics processor that substantially accelerates, and makes more efficient, the traditional methods of computation needed for machine learning and inference. Because components like these photonics chips are more efficient than existing GPUs, LLMs and the applications that run on them will also be more efficient, increasing their use cases. This in turn will cause greater demand for all levels of the AI Infrastructure. As AI becomes more energy and cost efficient, we can expect its adoption to skyrocket even more than it already has, leading to further innovations in the AI Infrastructure and further demand for the components that make it up.

GIQ® is searching for AI startups with new innovations in AI Infrastructure because they will be the drivers of exponential growth in the AI industry. Unlike the Industrial Revolution of the 1800s, the AI Industrial Revolution offers countless ways to innovate at every level of the AI Infrastructure stack. In the short term, at the micro level, companies will race for access to these new technologies and buy them in large quantities (e.g., Nvidia’s GPUs). At the macro level, innovations incentivize AI adoption across multiple industries, generating profits and new opportunities for everyone to participate. In the longer term, the largest incumbents will attempt to commoditize these new technologies by integrating them into their platforms in order to level the playing field against their competitors. As in past technological cycles, the AI startups that have effective IP strategies and obtain blocking rights will have moats high enough to thwart these inevitable attempts to commoditize their new technologies³.

DISCLAIMER: The opinions expressed in this blog post are not provided as legal advice.

[1] See GIQ® Blog Post January 19, 2024, “AI Innovations will Lead to a Tsunami of IP Rights.”

[2] See Benedict Evans, “Apple Intelligence and AI Maximalism” www.ben-evans.com (LLMs are becoming commodities sold at marginal costs and the question is what product you build on top).

[3] See GIQ® Blog Post May 13, 2024, “Blocking Patents Level the Playing Field.”

By Bob Steinberg